I was recently tasked with setting up a new cPanel system for our sister company, Green Light. Their existing system was a collection of standalone cPanel servers. It worked, but it was hard to expand and had minimal redundancy. I decided to set up a Proxmox cluster. Proxmox is a free and open-source product that provides a complete virtualization solution.

A brief overview of the system is as follows:

- Three servers hosted on the OVH network

- OVH’s vRack technology to put the servers on the same LAN

- Proxmox clustered, with Ceph for distributed storage

- A block of 32 IP addresses set up in the vRack

- Multiple cPanel servers, also clustered

- Backups, backups and more backups
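A block of 32 addresses is a /27 in CIDR notation, since 2^(32-27) = 32. The small POSIX shell sketch below does that arithmetic. The helper names are my own. It only subtracts the network and broadcast addresses, so check OVH’s documentation for any further addresses (such as a gateway) your block reserves.

```shell
#!/bin/sh
# Number of addresses in an IPv4 block with the given prefix length:
# 2^(32 - prefix).
block_size() {
    echo $(( 1 << (32 - $1) ))
}

# Addresses left once the network and broadcast addresses are excluded.
# Meaningful for prefixes up to /30. Assumption: any extra addresses the
# provider reserves (for example a gateway) are NOT subtracted here.
usable_hosts() {
    echo $(( $(block_size "$1") - 2 ))
}
```

For example, `block_size 27` prints 32 and `usable_hosts 27` prints 30.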

Install Proxmox

I’m not going to cover the actual Proxmox installation. Once you’ve got an OVH account, setting up a Proxmox server is straightforward, since Proxmox is one of the distributions offered in OVH’s install templates. Go through the steps and get all three servers running.

Before you can network the servers on the vRack, you need to sign up for it. It’s free and only takes a moment to set up. Once that’s done, add all your servers to the vRack from the OVH web control panel. In my case, this attached the eth1 interface on each server to the vRack. You could set IPs directly on that interface, but that won’t work for sharing the block of IPs between the servers, because the virtual machines need a bridge to attach to. Instead, create a new bridge and assign the server IPs there.

Create a new Bridge in Proxmox

Log in to the Proxmox admin panel on each server and click the “Network” tab. Create a new “Linux Bridge” and fill in the settings you need. I set the IP address, subnet mask, Autostart and, most importantly, Bridge ports. “Bridge ports” must point at the vRack interface, in my case eth1.
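For reference, the GUI records the bridge in /etc/network/interfaces. The sketch below prints roughly the stanza I’d expect for the settings above. Several details are illustrative assumptions:

- The function name, the bridge name vmbr1 and the 10.10.10.x addresses are examples.
- `bridge_stp off` and `bridge_fd 0` are the defaults Proxmox usually writes.

Compare the output with what your node actually generated rather than pasting it in.

```shell
#!/bin/sh
# Print an /etc/network/interfaces stanza for a Linux bridge whose only
# port is the vRack NIC. Usage: bridge_stanza NAME ADDRESS NETMASK PORT
# (the values used in the example below are hypothetical).
bridge_stanza() {
    name=$1 addr=$2 mask=$3 port=$4
    printf 'auto %s\n' "$name"
    printf 'iface %s inet static\n' "$name"
    printf '\taddress %s\n' "$addr"
    printf '\tnetmask %s\n' "$mask"
    printf '\tbridge_ports %s\n' "$port"
    printf '\tbridge_stp off\n'
    printf '\tbridge_fd 0\n'
}
```

On the first node that would be `bridge_stanza vmbr1 10.10.10.1 255.255.255.0 eth1`, with .2 and .3 on the other two.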

Once you’ve set up the bridge and assigned IPs to each server, log in via SSH and verify that each node can ping the others. If they can’t, go back and fix the network configuration, because the cluster won’t work until they can.
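To check all three nodes in one go, a small loop like this works from any of them. It assumes the nodes use 10.10.10.1 to 10.10.10.3 on the new bridge (hypothetical addresses, substitute your own). It also assumes the Linux iputils ping, where -W is a timeout in seconds.

```shell
#!/bin/sh
# Ping every node once and print ok/FAIL per node.
# Returns non-zero if any node did not answer.
check_nodes() {
    failed=0
    for node in "$@"; do
        if ping -c 1 -W 2 "$node" >/dev/null 2>&1; then
            echo "ok   $node"
        else
            echo "FAIL $node"
            failed=1
        fi
    done
    return "$failed"
}
```

Run `check_nodes 10.10.10.1 10.10.10.2 10.10.10.3` on each node. A non-zero exit status means at least one node didn’t answer.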

Setting up the Cluster

By default the cluster uses multicast for its corosync traffic. I was unable to get multicast working, but you can test whether it works on your network using the omping command.
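The Proxmox multicast notes in the references suggest two omping runs:

- a short flood test;
- a slower one lasting about ten minutes, meant to catch networks where multicast stops working after a few minutes (typically IGMP snooping without a querier).

The wrapper below just runs both. It assumes omping is installed on every node. Start it on all nodes at about the same time, since each node only answers while it is running omping itself. You want zero packet loss in both summaries.

```shell
#!/bin/sh
# Run both omping checks from this node against every listed node.
# Usage: multicast_check 10.10.10.1 10.10.10.2 10.10.10.3 (example IPs)
multicast_check() {
    echo "Short flood test (10000 packets, 1 ms apart)..."
    # Stop here if omping itself fails to run.
    omping -c 10000 -i 0.001 -F -q "$@" || return 1
    echo "Ten-minute test (600 packets, 1 s apart)..."
    omping -c 600 -i 1 -q "$@"
}
```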

Start by creating the cluster on one of the nodes:

pve1# pvecm create YOUR-CLUSTER-NAME

If you’re using unicast instead of multicast, I found it easiest to edit /etc/pve/corosync.conf directly: add an entry for each cluster node to the nodelist, and add transport: udpu inside the totem block (the Proxmox documentation also says to increment config_version whenever you edit this file). Once that was done, I rebooted the server. When it came back online, I set the expected vote count to 1 (pvecm e is short for pvecm expected):
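Writing the nodelist by hand is easy to get wrong, so here’s a sketch that prints one from name=ip pairs. The function name, node names and addresses are hypothetical examples. In the existing totem block you still add transport: udpu yourself and bump config_version there.

```shell
#!/bin/sh
# Print a corosync nodelist block from name=ip pairs, numbering nodes from 1.
# Usage: corosync_nodelist pve1=10.10.10.1 pve2=10.10.10.2 pve3=10.10.10.3
corosync_nodelist() {
    echo "nodelist {"
    id=1
    for pair in "$@"; do
        echo "  node {"
        echo "    name: ${pair%%=*}"       # text before the '='
        echo "    nodeid: $id"
        echo "    quorum_votes: 1"
        echo "    ring0_addr: ${pair#*=}"  # text after the '='
        echo "  }"
        id=$((id + 1))
    done
    echo "}"
}
```

Paste the output over the nodelist section of a copy of the file, check it, then move it into place.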

pve1# pvecm e 1
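Why lowering expected votes helps: corosync’s votequorum needs a strict majority of the expected votes, floor(expected / 2) + 1. Consider the state right after the reboot:

- With three nodes in the nodelist, expected votes is 3 and quorum is 2.
- A lone node holding 1 vote therefore isn’t quorate, and Proxmox keeps /etc/pve read-only until it is.
- Setting expected votes to 1 drops the threshold to 1, which is presumably why this step was needed.

The helpers below are a hypothetical illustration of that rule, not part of pvecm.

```shell
#!/bin/sh
# Votes needed for quorum: a strict majority, floor(expected / 2) + 1.
quorum_needed() {
    echo $(( $1 / 2 + 1 ))
}

# Succeed if VOTES present are enough for quorum given EXPECTED votes.
# Usage: is_quorate VOTES EXPECTED
is_quorate() {
    [ "$1" -ge "$(quorum_needed "$2")" ]
}
```

`is_quorate 1 3` fails (2 votes needed), while `is_quorate 1 1` succeeds.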

Then join each additional node to the cluster, giving pvecm add the IP address of a node that is already in it:

pve2# pvecm add IP-ADDRESS-CLUSTER

pve3# pvecm add IP-ADDRESS-CLUSTER
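If you’d rather drive the joins from one machine, a loop over SSH does the same thing, one node at a time. This sketch assumes root SSH access to each new node; the names pve2 and pve3 are examples. It uses ssh -t because pvecm add may ask for the existing node’s root password.

```shell
#!/bin/sh
# Run `pvecm add EXISTING_IP` on each listed node over SSH, sequentially.
# Usage: join_nodes 10.10.10.1 pve2 pve3
join_nodes() {
    existing=$1
    shift
    for node in "$@"; do
        echo "Joining $node to the cluster at $existing"
        # Stop at the first failed join so problems aren't compounded.
        ssh -t "root@$node" pvecm add "$existing" || return 1
    done
}
```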

After you’ve added each node, you can check the cluster status with:

pve1# pvecm status
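To script that check, the function below reads pvecm status output. It succeeds only when the cluster is quorate and reports the expected number of nodes. It looks for the Nodes: and Quorate: lines as they appear in Proxmox 4.x output. Treat those field names as an assumption and compare them with your own output.

```shell
#!/bin/sh
# Read `pvecm status` output on stdin; succeed only if "Quorate:" is Yes
# and "Nodes:" equals the expected count. Usage: pvecm status | cluster_ok 3
cluster_ok() {
    want=$1
    status=$(cat)
    quorate=$(printf '%s\n' "$status" |
        awk -F: '/^Quorate:/ { gsub(/[ \t]/, "", $2); print $2; exit }')
    nodes=$(printf '%s\n' "$status" |
        awk -F: '/^Nodes:/ { gsub(/[ \t]/, "", $2); print $2; exit }')
    [ "$quorate" = "Yes" ] && [ "$nodes" = "$want" ]
}
```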

Congratulations, you should have a functioning cluster now.

Setting up Ceph

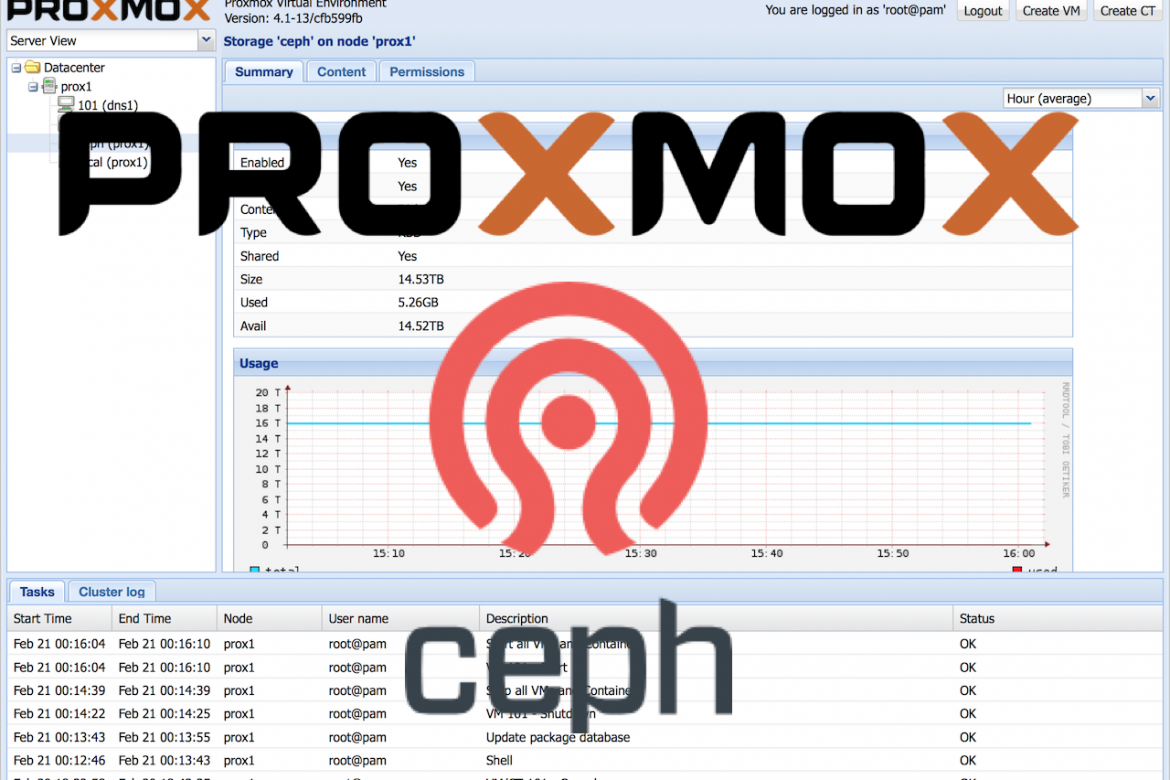

“Ceph is a unified, distributed storage system designed for excellent performance, reliability, and scalability.” It’s kind of like RAID, but spread across servers. In my case, each server contributes two 2TB hard drives to the Ceph pool, each running as an OSD (object storage daemon), giving me a total of almost 11TB of raw space.
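The “almost 11TB” figure is a units effect. Three servers × two drives × 2 TB is 12 TB in the decimal units drives are sold in, which is about 10.91 TiB. That’s raw capacity: a replicated pool stores every object several times. Usable space is therefore roughly raw capacity divided by the pool’s replica count, before overhead and free-space margins. The sketch below does that arithmetic with awk. The replica count is an argument; the 3 in the example is an assumption, so check your pool’s size setting.

```shell
#!/bin/sh
# Capacity in TiB of identical drives given in decimal terabytes,
# optionally divided by a replica count (default 1 = raw capacity).
# Usage: ceph_capacity SERVERS DRIVES_PER_SERVER DRIVE_TB [REPLICAS]
ceph_capacity() {
    awk -v s="$1" -v d="$2" -v tb="$3" -v r="${4:-1}" 'BEGIN {
        bytes = s * d * tb * 1e12               # decimal terabytes to bytes
        printf "%.2f\n", bytes / (1024 ^ 4) / r  # bytes to TiB, per replica
    }'
}
```

`ceph_capacity 3 2 2` prints 10.91 (TiB raw), and `ceph_capacity 3 2 2 3` prints 3.64.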

Start by installing Ceph on each node:

pve1# pveceph install -version jewel

pve2# pveceph install -version jewel

pve3# pveceph install -version jewel
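Once the installs finish, it’s worth confirming that all three nodes ended up on the same release. Jewel is Ceph 10.2.x, so the major number in `ceph --version` output identifies it. This hypothetical helper maps that number to a release name; it only knows a few releases around Jewel.

```shell
#!/bin/sh
# Read `ceph --version` output on stdin and print the release name for its
# major version number, or "unknown" if it isn't in the short table below.
ceph_release() {
    major=$(sed -n 's/^ceph version \([0-9]*\)\..*/\1/p')
    case "$major" in
        9)  echo infernalis ;;
        10) echo jewel ;;
        11) echo kraken ;;
        12) echo luminous ;;
        *)  echo unknown ;;
    esac
}
```

Assuming root SSH access, `for n in pve1 pve2 pve3; do ssh root@$n ceph --version | ceph_release; done` should print jewel three times.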

Next, initialize the network Ceph will use. Since I only have one network available, Ceph shares it with the cluster traffic:

pve1# pveceph init --network 10.10.10.0/24

This command creates a new Ceph config file and distributes it to the rest of the nodes in the cluster. Next, create a monitor on each node. I did this from the command line on each server, but once the first one exists you can create the others from the GUI if you prefer:

pve1# pveceph createmon

pve2# pveceph createmon

pve3# pveceph createmon
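Each monitor takes its address from the network passed to pveceph init. Every node therefore needs an IP inside 10.10.10.0/24 on the bridge set up earlier. If createmon fails on one node, a quick check like this helps rule out an address outside that range. It’s pure shell arithmetic with hypothetical helper names, and it assumes octets are written without leading zeros.

```shell
#!/bin/sh
# Convert a dotted-quad IPv4 address (no leading zeros) to a 32-bit integer.
ip_to_int() {
    a=${1%%.*}; rest=${1#*.}
    b=${rest%%.*}; rest=${rest#*.}
    c=${rest%%.*}; d=${rest#*.}
    echo $(( ($a << 24) + ($b << 16) + ($c << 8) + $d ))
}

# Succeed if IP lies inside NETWORK/PREFIX.
# Usage: in_subnet 10.10.10.2 10.10.10.0/24
in_subnet() {
    net=${2%/*}
    prefix=${2#*/}
    # Keep only the top $prefix bits (mask is 0 for a /0).
    mask=$(( (0xFFFFFFFF << (32 - $prefix)) & 0xFFFFFFFF ))
    [ $(( $(ip_to_int "$1") & $mask )) -eq $(( $(ip_to_int "$net") & $mask )) ]
}
```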

Create Ceph OSDs

This step is easiest from the GUI. On each node, go to “Ceph/OSD” and click “Create: OSD”. This pops up a dialog where you choose which drive to use. Add all the drives this way and let Ceph do its thing.
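If you’d rather stay on the command line, Proxmox 4.x has pveceph createosd, which takes one disk per call (newer releases renamed it pveceph osd create). The loop below runs it for each disk you list. /dev/sdb and /dev/sdc are example names. The disks should be unused, since preparing an OSD repartitions and formats them.

```shell
#!/bin/sh
# Create one OSD per listed disk on this node.
# Usage: create_osds /dev/sdb /dev/sdc
create_osds() {
    for disk in "$@"; do
        case "$disk" in
            /dev/*) ;;
            *) echo "skipping $disk: not a /dev path" >&2; continue ;;
        esac
        echo "Creating OSD on $disk"
        # Stop at the first failure rather than carrying on blindly.
        pveceph createosd "$disk" || return 1
    done
}
```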

References

- https://pve.proxmox.com/wiki/Proxmox_VE_4.x_Cluster

- https://pve.proxmox.com/wiki/Multicast_notes

- http://pve.proxmox.com/wiki/Multicast_notes#Use_unicast_.28UDPU.29_instead_of_multicast.2C_if_all_else_fails

- https://pve.proxmox.com/wiki/Separate_Cluster_Network

- https://forum.proxmox.com/threads/install-ceph-server-on-proxmox-ve-video-tutorial.32289/